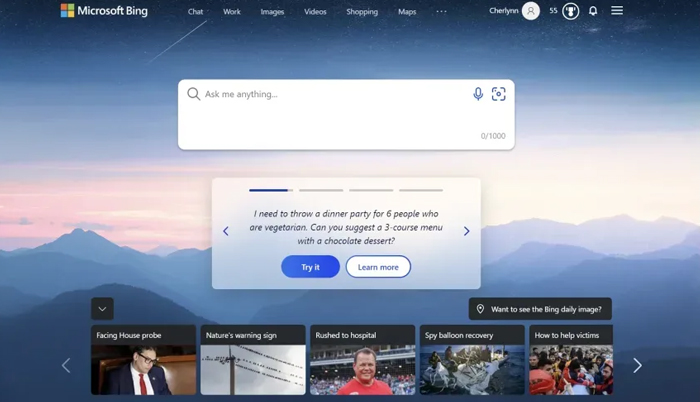

Microsoft Gives Bing's AI Chatbot Personality Options

Microsoft

March 3rd, 2023 | 4:06 PM


CALIFORNIA, UNITED STATES

You can make the chatbot more entertaining or direct.

Microsoft has quickly acted on its promise to give you more control over the Bing AI's personality. Web services chief Mikhail Parakhin has revealed that 90 percent of Bing preview testers should see a toggle that changes the chatbot's responses. A Creative option allows for more "original and imaginative" (read: fun) answers, while a Precise switch emphasizes shorter, to-the-point replies. There's also a Balanced setting that aims to strike a middle ground.

The company reined in the Bing AI's responses after early users noticed strange behavior during long chats and "entertainment" sessions. As The Verge observes, the restrictions irked some users because the chatbot would simply decline to answer some questions. Microsoft has been gradually lifting limits since then, and just this week updated the AI to reduce both unresponsiveness and "hallucinations." The bot may not be as wonderfully weird, but it should also be more willing to indulge your curiosity.

The toggle arrives as Microsoft is expanding access to the Bing AI. It brought the technology to its mobile apps and Skype in late February, and days ago made the feature available through the Windows 11 taskbar. The flexibility could make the AI more useful across these varied environments, and it adds a level of safety as more everyday users give the system a try. If you choose Creative, you likely know not to implicitly trust the results.

Source: courtesy of ENGADGET, by Jon Fingas
